Volume management (LVM) can be an interesting addition to your block device(s), but it does add an extra layer between your block devices and SCST. If you need any of the features provided by LVM, go ahead and use it.

Alpine Linux includes an init.d script that can start the mdadm monitoring daemon. By default, it monitors the arrays defined in mdadm.conf. To receive email notifications about array issues, add your email address to mdadm.conf with a `MAILADDR` line. mdadm cannot send email by itself, so we need to set up a sendmail (replacement) program such as ssmtp, which can be configured by editing /etc/ssmtp/ssmtp.conf. You can follow the actions invoked by mdadm in the system messages (syslog). If you run your own monitoring system, you can also let mdadm run a script that notifies it.

To keep your array healthy, we can also monitor the hard disks through their SMART interface, which can tell you at an early stage when a disk begins to fail. Smartmontools includes a daemon called smartd, which runs in the background and notifies you by email or script about any issues with your hard disks. By default, smartd.conf contains a DEVICESCAN line, which automatically scans your system and monitors all SMART-capable devices. Because we want to change this default configuration, we comment that line out and add one line per disk instead.

In these lines, the `-a` option enables most of the basic monitoring, `-d` specifies the device type (in my case a SATA disk), and `-m` tells smartd to email me any issues it finds with that disk. I have also included the `-M test` switch so that smartd sends me a test email at startup, confirming that my mail subsystem works. If you want smartd to send email, you need a minimal SMTP setup like the ssmtp one above, plus the mail client included in mailx. When everything is set up, start smartd and make sure it starts at boot.
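The per-disk smartd.conf lines mentioned above are not reproduced in this copy of the post. The function below is a minimal sketch and not the author's exact file. It comments out the default DEVICESCAN line and appends one entry per disk using the `-a`, `-d`, `-m` and `-M test` options. The function name `smartd_add_disks` is hypothetical. It assumes SATA disks (`-d sat`) and takes the config path, mail address and device names as arguments.

```shell
# Hypothetical helper (not from the original post): rewrite smartd.conf so that
# automatic scanning is disabled and each listed disk gets an explicit entry.
#   -a       enable smartd's default set of checks
#   -d sat   device type; assumes SATA disks behind a SCSI-to-ATA layer
#   -m ADDR  email warnings to ADDR
#   -M test  send a test email when smartd starts
smartd_add_disks() {
    conf=$1      # path to smartd.conf (location depends on your system)
    mailto=$2    # address that should receive warnings
    shift 2      # remaining arguments are disk device paths

    # Comment out an active DEVICESCAN line; a temporary file is used
    # instead of the non-POSIX `sed -i`.
    sed 's/^[[:space:]]*DEVICESCAN/#&/' "$conf" > "$conf.tmp" &&
        mv "$conf.tmp" "$conf" || return 1

    for disk in "$@"; do
        # Append an entry only if this disk has none yet, so reruns are safe.
        if ! awk -v d="$disk" '$1 == d { found = 1 } END { exit !found }' "$conf"; then
            printf '%s -a -d sat -m %s -M test\n' "$disk" "$mailto" >> "$conf"
        fi
    done
}
```

For the four-disk array used in this post, a call might look like `smartd_add_disks /etc/smartd.conf you@example.com /dev/sda /dev/sdb /dev/sdc /dev/sdd`. This assumes the config lives at /etc/smartd.conf. After that, start smartd and enable it at boot. With OpenRC this is presumably `rc-service smartd start` and `rc-update add smartd`, assuming the smartmontools package installs a `smartd` service.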

# Linux software RAID monitoring #

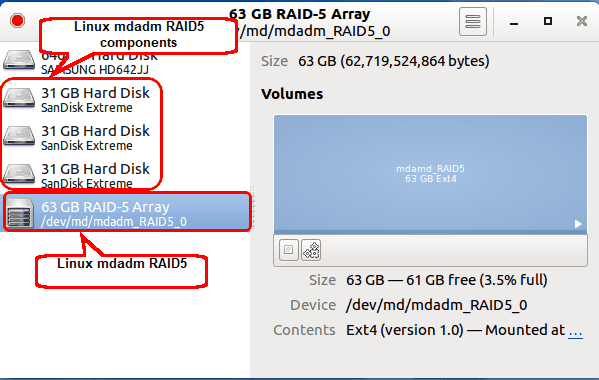

The default Linux kernel provides support for iSCSI. The problem with this implementation is that it operates in user space. SCST iSCSI runs in kernel space, which is one of the reasons why it performs much better.

SCST performance depends on specific patches that need to be applied to the kernel. This is why we created a separate kernel just for SCST usage. The SCST modules are already included in it, so there is no need to install a separate module package. Note: we only provide an x86_64 kernel for SCST, because SCST performs better on 64-bit systems.

In my setup, I have four WD RE4 1 TB drives connected to an mpt2sas-based controller. I'm using a Dell PowerEdge R510, which includes Dell's most basic RAID controller, the PERC H200. I tried using the controller's RAID10 support, but rebuilding the array took more than 3 days. According to mailing lists, this is common with this adapter, so I chose not to use this kind of "hardware" RAID. Performance in degraded mode is really poor, and my users would suffer from it for too long. There is also little to no way to control this array from Alpine Linux, because all LSI tools are built for glibc and will not run on uClibc hosts.

I started looking for the best-performing RAID level with redundancy. According to many mailing lists and the opinion of the Linux RAID author, RAID10 with the f2 (far) layout seems to perform best while still providing redundancy. Please remember that with RAID10, 50% of your hard disk space goes to redundancy (four 1 TB drives give about 2 TB of usable space), but performance is almost the same as RAID0 (striping). For the most up-to-date information regarding Linux software RAID, see: Raid setup

Make sure the mdadm RAID configuration tool is installed. I am not using partitions on my disks, although there are reasons to use them. Create the array:

```
mdadm -v --create /dev/md0 --level=raid10 --layout=f2 --raid-devices=4 /dev/sda /dev/sdb /dev/sdc /dev/sdd
```

You can now monitor the RAID (re)build:

```
cat /proc/mdstat
```

By default, the rebuild speed is limited, and this limit can be checked and changed. Make sure the raid10 module is loaded at boot. When you are happy with your RAID configuration, save its information to the mdadm.conf file. The entry for this array looks like this:

```
ARRAY /dev/md0 metadata=1.2 name=scst:0 UUID=71fc93b8:3fef0057:9f5feec1:7d9e57e8
```

When your RAID setup is ready and functioning, make sure it starts at boot time. Linux software RAID can then be monitored with mdadm's daemon option.
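`cat /proc/mdstat` lets you check the array state by eye. For a cron job or your own monitoring system, the same check can be scripted. The sketch below is not part of the original setup, and the function name `md_status` is hypothetical. It assumes the usual /proc/mdstat layout:

- each array's member status appears as a bracketed string such as `[UUUU]`, with `_` marking a missing member;
- rebuild progress appears as `recovery = 12.6%` or `resync = ...`.

It prints one line per finding and returns a non-zero status when any array is degraded.

```shell
# Hypothetical helper (not from the original post): summarise /proc/mdstat.
# Prints "<array>: ok [UUUU]" or "<array>: DEGRADED [UU_U]" for each array,
# plus "<array>: <action> <percent>" while a resync/recovery is running.
# Returns 1 if any array is degraded, 0 otherwise.
md_status() {
    mdstat=${1:-/proc/mdstat}   # allow a different file, e.g. for testing
    awk '
        # A new array block starts with a line like "md0 : active raid10 ..."
        /^md[0-9]+ :/ { dev = $1 }

        # Member status such as [UUUU]; "_" marks a missing or failed disk.
        dev != "" && match($0, /\[[U_]+\]/) {
            st = substr($0, RSTART + 1, RLENGTH - 2)
            if (index(st, "_")) { print dev ": DEGRADED [" st "]"; bad = 1 }
            else print dev ": ok [" st "]"
        }

        # Progress lines look like "... recovery = 12.6% (...) finish=... speed=..."
        dev != "" {
            for (i = 1; i < NF - 1; i++)
                if ($i ~ /^(recovery|resync|reshape|check)$/ && $(i + 1) == "=") {
                    print dev ": " $i " " $(i + 2)
                    break
                }
        }

        END { exit (bad ? 1 : 0) }
    ' "$mdstat"
}
```

On a healthy four-disk array this prints a line such as `md0: ok [UUUU]`. The exit status can be chained to an alert, for example `md_status || logger "RAID array degraded"` to write a message to syslog.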
